One of the things most of us look at when deciding whether to use a new open source library in our software is how “popular” it is. This isn’t even restricted to open source software: we do the same thing when evaluating anything we’re going to use, whether it’s a product we’re buying on Amazon or a new restaurant we want to try.

The theory is that if something is a top open source project in terms of its download numbers, it will be better maintained because more people care about it. Also, with more users, there are fewer corner-case problems that nobody has hit yet: the “given enough eyeballs, all bugs are shallow” effect.

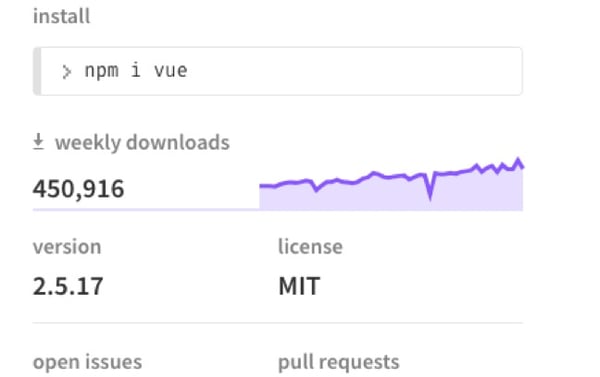

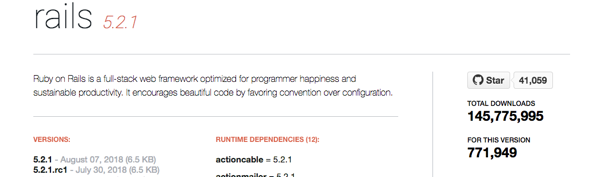

And with open source, the transparency of the underlying systems leads to some initially simple ways to look and come up with numbers. I mean, there’s gotta be data out there about how many downloads a given library has had, right? It’s right there on the RubyGems and npm package pages:

Great! As engineers, we love having a metric we can use: if A is greater than B, A is clearly better. Right?

Well, not necessarily.

What happens if I download the library once to evaluate it and never use it? That still counts as a download and makes the number higher.

What happens if a large team is using the library and now has to download it for every single one of their users? More download inflation.

What happens if I have continuous integration set up and download my dependencies as part of every build? Now the download count is a proxy for how active my development team is and not reflective of anything about the popularity of the library itself.

What if I’m a large company with a lot of teams using the library, but I have a caching proxy or a local artifact server serving the packages? Now the numbers are lower than they would otherwise be.

What if I don’t like using the package manager and just vendor everything? Another deflation of the numbers (side note: please don’t vendor everything!).

What if there’s a transparent caching proxy between me and the package server origin? More deflation.

And that’s just a selection of reasons you might not be able to trust the numbers.

None of this is new. The same was true when trying to assess the popularity of Linux distributions or of packages in a given distribution. So let’s stop caring about package download counts, and stop showing them in package managers, as an indication of popularity. Instead, look at more objective criteria about a project’s health when you evaluate it. You might even want to check out the Tidelift Subscription, which can provide more useful information and tools to help you choose which packages to use.

50 Milk St, 16th Floor, Boston, MA 02109